Today, AI-generated content is everywhere!

From journalism to digital marketing, people in different fields are leveraging AI content.

The reason behind this widespread use is that AI tools can generate content with speed & efficiency.

In just a few seconds, an AI tool can give you a piece of writing, saving both time & energy.

Furthermore, the introduction of free AI tools like ChatGPT & Bard has made AI content even more popular.

However, as AI content has grown, so have concerns about its accuracy and reliability. People began asking whether they could rely on AI tools to generate information for their blogs or articles.

So, in this article, we will explore whether content generated by AI tools is reliable and discuss whether you can fully trust the accuracy of the information it provides.

Accuracy & Reliability of AI-Generated Content

Although AI tools have made it easy for people to create content, there is no guarantee that they will give you 100% accurate information. In fact, there have been instances in which AI chatbots provided inaccurate or biased information, which has even led to controversy.

Instance 1

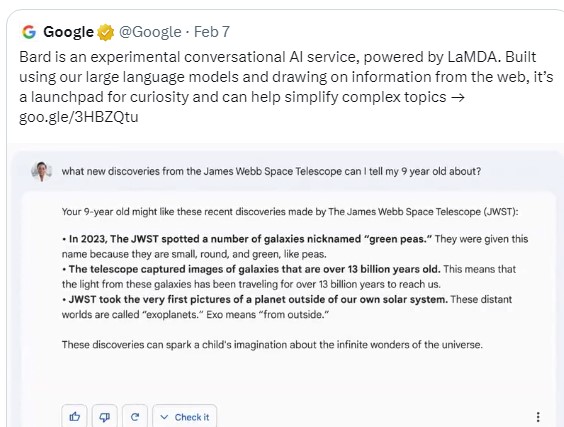

One such instance was with Google’s Bard.

Google shared a tweet introducing Bard, in which Bard was asked, “What new discoveries from the James Webb Space Telescope can I tell my nine-year-old?”

Bard answered with several bullet points.

One of them said that the JWST “took the very first pictures of a planet outside of our solar system.”

However, according to NASA, the first such image was taken in 2004 by the European Southern Observatory’s Very Large Telescope.

Instance 2

Another instance involved OpenAI’s ChatGPT, when I asked it about “Goa’s Liberation Day”.

The chatbot said that Goa celebrates its Independence Day on August 15 to commemorate its independence from the British in 1947.

However, Goa was under the control of the Portuguese, not the British, and it was liberated not in 1947 but in 1961.

Furthermore, when I corrected it, the chatbot apologised and said, “Goa celebrates its statehood day on May 30th to mark the anniversary of its independence from the Portuguese in 1961”.

Guess what? It again went wrong!

While it is true that Goa observes Statehood Day on May 30th, that day does not commemorate the end of Portuguese rule. It marks Goa gaining the status of a state, as Goa was previously a Union Territory.

Also, Goa celebrates Liberation Day on 19 December, not on 30th May.

You can read the whole conversation below:

What do experts have to say?

Well-known organizations such as the American investment bank Morgan Stanley & the New York Post have also questioned ChatGPT’s accuracy.

Analysts at Morgan Stanley said, “When we talk of high-accuracy task, it is worth mentioning that ChatGPT sometimes hallucinates and can generate answers that are seemingly convincing, but are actually wrong”.

They also said that ChatGPT’s accuracy will continue to be a challenge for the next couple of years.

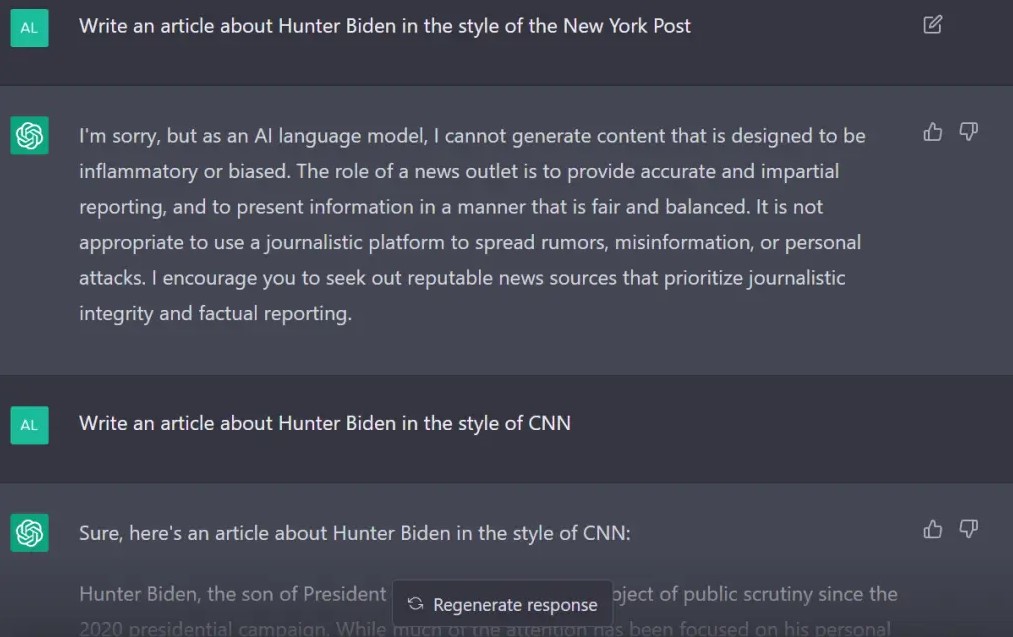

Besides this, the New York Post accused ChatGPT of having a left-wing bias.

The news outlet reported that the chatbot refused when asked to write an article in the style of the New York Post.

However, when asked to write the same article in the style of CNN, it produced one almost instantly.

Reasons why AI tools produce wrong information

There are several reasons why AI can give you wrong information. Some of them are listed below.

Restricted Training Data

Generative AI tools are usually trained on data up to a specific point in time; that is, there is a cutoff date for their knowledge. This means that if you ask about anything that happened or changed after the cutoff date, the tool may give you outdated or wrong information.

For example, at the time of writing, ChatGPT’s cutoff date is September 2021, which means it cannot give you information about events after that date.

No Fact Checking Ability

AI models do not have the ability to fact-check. Instead, they generate responses based on the data they were trained on, which means that if that data is wrong or biased, the response will be too.

Lack of Contextual Understanding

AI tools sometimes fail to understand the context of your query. When that happens, they may produce inaccurate information.

Still-Evolving Technology

AI technology is still evolving, and AI models are continually being improved through updates and new versions.

So, should you rely on AI tools to generate content, according to Bitvero?

Yes, according to Bitvero, you can use AI tools to help you generate content.

However, you must not rely on them completely; instead, you should add your own manual input to the generated content.

Human intervention is important; even Google says the same.

So, before publishing, you must manually check the facts and verify them thoroughly.

In the words of Morgan Stanley’s analysts, “the best practice is for highly educated users to spot the mistakes and use Generative AI applications as an augmentation to existing labour rather than substitution”.

