Dall-E 2 is a generative AI technology, just like ChatGPT. However, instead of generating text, as ChatGPT does, Dall-E 2 generates images from text prompts. It was introduced by OpenAI as an enhanced version of Dall-E, a generative model the company had developed previously.

As an enhanced version, it can turn almost any idea into an image, no matter how creative or bizarre. It can even generate images of things or concepts that do not exist in reality.

One example is an image of “A teddy bear on a skateboard in Times Square” generated by Dall-E 2.

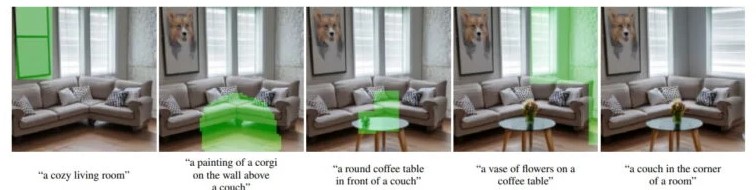

Apart from generating images, the model also excels at editing or modifying existing images.

It can edit an existing image by adding objects that are not in the scene, and it can also replace objects that are already there.

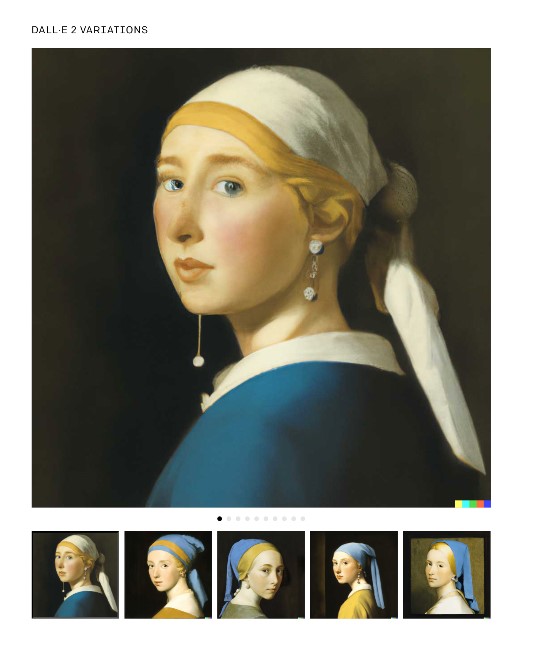

You can also ask it to create variations of an image with different angles and styles.

It is so good at turning imagination into images that its developers named it after two figures: Salvador Dalí, a surrealist painter, and WALL-E, the robot from the Pixar movie of the same name.

Salvador Dalí was a Spanish surrealist painter who is famous for his bizarre and dreamlike artworks.

WALL-E is a small robot who is fascinated by human culture and is known for his curiosity and ingenuity.

Now that you are aware of Dall-E 2, let’s understand how it works.

How does Dall-E 2 work?

Dall-E 2 relies on several technologies to perform its work, including natural language processing, a text-and-image encoding model, and diffusion models.

Natural Language Processing: helps the model understand the meaning of the text input.

Text-and-image encoder (CLIP): encodes text and images as embeddings that capture their semantic information. OpenAI developed this model, called CLIP, and it is a part of Dall-E 2.

Diffusion Model: mainly responsible for the task of generating images.

Let us first understand CLIP and the diffusion model and their functions. Then, we will learn how they work together to help Dall-E 2 generate images.

CLIP

CLIP, which stands for Contrastive Language-Image Pre-Training, is a multimodal model trained on a large dataset of images paired with their captions.

As the name suggests, it is a “contrastive” model, meaning it does not generate an image for the given input. Instead, it compares a piece of text with images and measures how well the text describes each image, having learned this from the image-caption pairs in its dataset.

For this, each text-image pair is assigned a similarity score, and the pair with the highest similarity score is considered the best match.

Suppose the prompt given to Dall-E 2 is “cat.” Images that appeared in training with captions like “My cute cat is sleeping” or “The white fluffy cat is sitting on the window” will get a higher similarity score with this prompt than images of unrelated subjects.

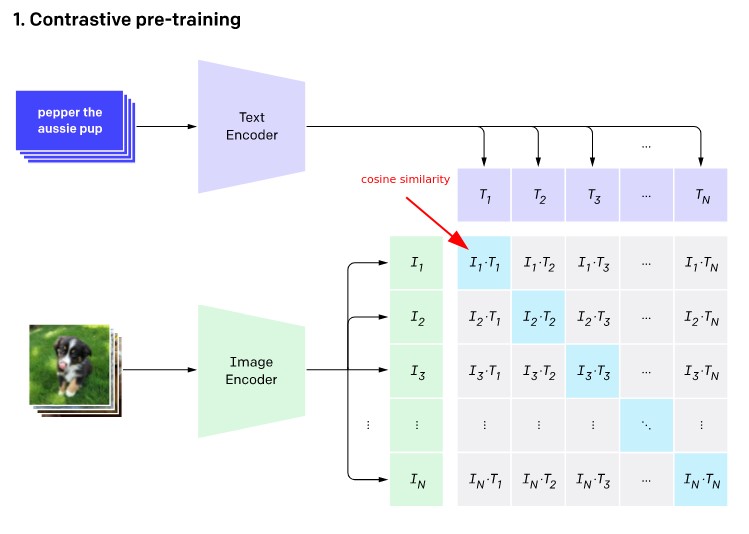

To perform the whole process, CLIP relies on two components:

Text Encoder: turns captions or other text into a text embedding (an embedding is a list of numbers, i.e., a vector, that represents meaning). In effect, it does the NLP work: it captures the meaning of the human input and turns it into numbers that Dall-E 2 can work with.

Image Encoder: turns images into image embeddings.

CLIP then compares the text embedding with the image embedding using a measure called cosine similarity. The closer this value is to 1, the more semantic information the text and the image share.
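To make the comparison concrete, here is a minimal sketch of cosine similarity in plain Python. The three-number embeddings are made up for illustration (real CLIP embeddings have hundreds of dimensions and come from the trained encoders), and the variable names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings, for illustration only.
text_cat = [0.9, 0.1, 0.0]    # embedding of the text "cat"
image_cat = [0.8, 0.2, 0.1]   # embedding of a cat photo
image_car = [0.0, 0.2, 0.9]   # embedding of a car photo

sim_cat = cosine_similarity(text_cat, image_cat)
sim_car = cosine_similarity(text_cat, image_car)

# The cat photo scores closer to 1 than the car photo does.
print(sim_cat > sim_car)
```

Vectors that point in nearly the same direction score close to 1, while unrelated ones score close to 0, which is why the score works as a measure of shared meaning.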

The given representation makes this easier to understand.

In the example, I1 is the embedding of the first image, T1 is the embedding of the first image’s caption, and the values in the matrix are the similarities between the intersecting embeddings. The blue highlighted cells, where each image meets its own caption, have the highest values, and the grey ones have the lowest values.
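The similarity matrix can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the embeddings are invented, each row stands for an image and each column for a caption, and the names are hypothetical. A trained CLIP model would produce the embeddings itself.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up embeddings: image k and caption k describe the same thing.
image_embeddings = [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1], [0.0, 0.2, 0.9]]
text_embeddings = [[0.8, 0.2, 0.1], [0.2, 0.8, 0.0], [0.1, 0.1, 0.9]]

# similarity[i][j] compares image i with caption j.
similarity = [
    [cosine_similarity(image, text) for text in text_embeddings]
    for image in image_embeddings
]

# For every image, pick the caption with the highest score.
best_caption = [row.index(max(row)) for row in similarity]
print(best_caption)  # prints [0, 1, 2]
```

Each image matches its own caption best, so the highest values sit on the diagonal of the matrix. Contrastive training pushes the diagonal values up and the off-diagonal values down.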

Diffusion Model

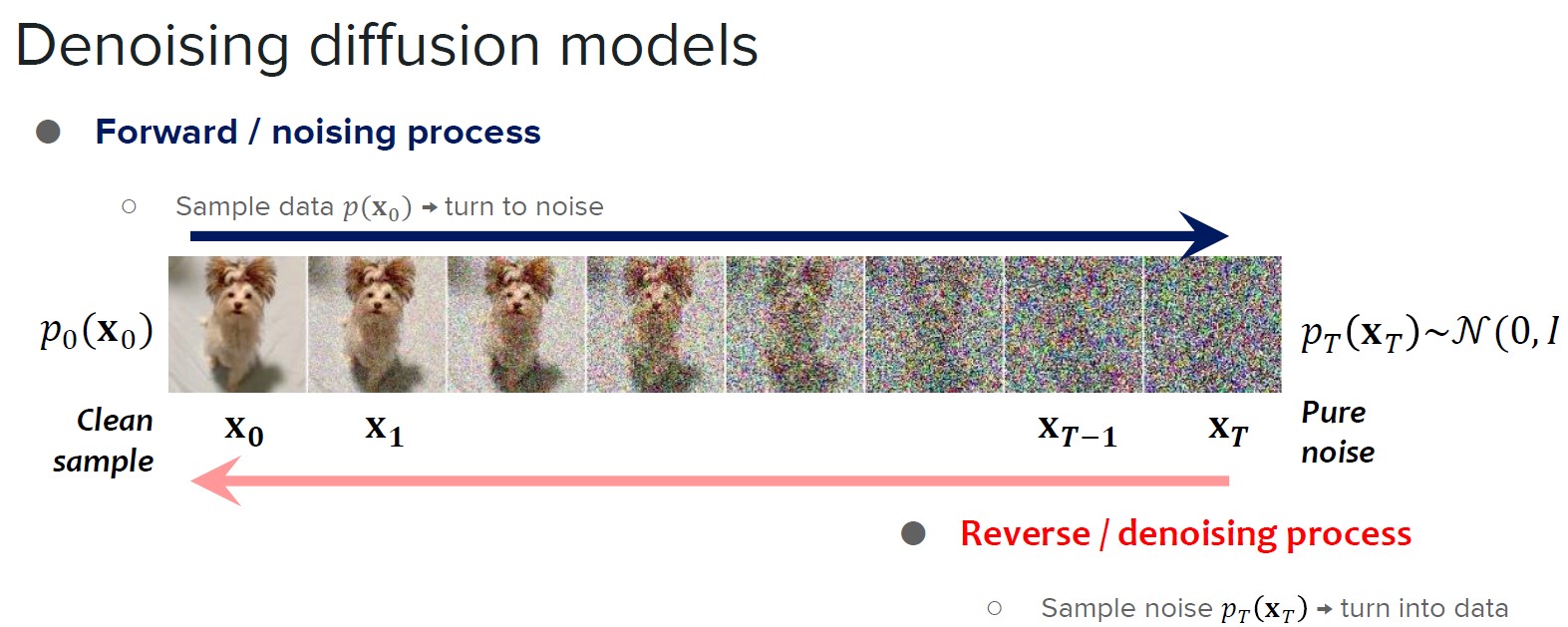

It is the actual model responsible for generating images. Its internal working is fairly simple and can be divided into two parts.

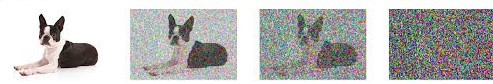

Forward diffusion: starts from a clean image and gradually adds, or diffuses, noise into it until nothing but noise remains. That means several versions of the same image are created in sequence, each noisier than the one before. This is called the forward diffusion process.

Reverse diffusion: does the opposite. It takes the noisy image and removes the noise step by step until the image returns to its original form or, at least, as close to it as possible. This is known as the denoising or reverse diffusion process.

In Dall-E 2, the names prior and decoder refer to two separate models, covered under Dall-E 2 Architecture. Both are diffusion models trained with the forward and reverse processes described here.
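The forward process can be sketched in plain Python. This is a simplified illustration, not Dall-E 2's actual code: it assumes a constant noise rate `beta` at every step (real models typically vary it according to a schedule), and the "image" is just a list of 1000 identical pixel values. The function name and parameters are hypothetical.

```python
import math
import random

def forward_diffusion(pixels, num_steps=50, beta=0.1, seed=0):
    """Return the clean image followed by num_steps noisier versions.

    Each step computes x_t = sqrt(1 - beta) * x_(t-1) + sqrt(beta) * noise,
    where noise is drawn from a standard normal distribution.
    """
    rng = random.Random(seed)
    versions = [list(pixels)]
    for _ in range(num_steps):
        previous = versions[-1]
        noisy = [
            math.sqrt(1 - beta) * x + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for x in previous
        ]
        versions.append(noisy)
    return versions

clean = [1.0] * 1000  # toy "image": every pixel has the value 1.0
trajectory = forward_diffusion(clean)

# The average pixel value drifts from 1.0 toward 0 as the image dissolves into noise.
means = [sum(version) / len(version) for version in trajectory]
print(round(means[0], 2), round(means[-1], 2))
```

After enough steps, almost nothing of the original signal remains and the pixels look like pure random noise, which is the starting point for the reverse process.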

This process of diffusion and denoising creates a trajectory of images that represents the data distribution. By learning the dynamics of this trajectory during training, a diffusion model becomes able to generate new images that resemble the data it was trained on.

Now that you are aware of CLIP and diffusion models, let us understand how they work together in the Dall-E 2 architecture.
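The denoising direction can be illustrated with a small sketch. It assumes the common noise-prediction formulation of diffusion models, in which a network is trained to guess the noise that was added; the article does not say which formulation Dall-E 2 uses, so treat this as an assumption. Here the true noise stands in for the network's prediction, and `alpha_bar` (the fraction of the original signal that survives) is a hypothetical value.

```python
import math
import random

def add_noise(clean, noise, alpha_bar):
    """Jump straight to a noisy version: sqrt(alpha_bar)*x0 + sqrt(1 - alpha_bar)*noise."""
    return [
        math.sqrt(alpha_bar) * x + math.sqrt(1 - alpha_bar) * e
        for x, e in zip(clean, noise)
    ]

def remove_noise(noisy, predicted_noise, alpha_bar):
    """Invert add_noise, given a prediction of the noise that was added."""
    return [
        (x - math.sqrt(1 - alpha_bar) * e) / math.sqrt(alpha_bar)
        for x, e in zip(noisy, predicted_noise)
    ]

rng = random.Random(0)
clean = [0.2, 0.5, 0.9, 0.1]               # toy "image" with four pixels
noise = [rng.gauss(0.0, 1.0) for _ in clean]
alpha_bar = 0.3                             # hypothetical noise level

noisy = add_noise(clean, noise, alpha_bar)
# A trained model would predict the noise; here we cheat and use the true noise.
recovered = remove_noise(noisy, noise, alpha_bar)

print(all(abs(r - c) < 1e-9 for r, c in zip(recovered, clean)))
```

With a perfect noise prediction the clean image comes back exactly. A real model's prediction is imperfect, which is why denoising is done in many small steps rather than in one jump.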

Dall-E 2 Architecture

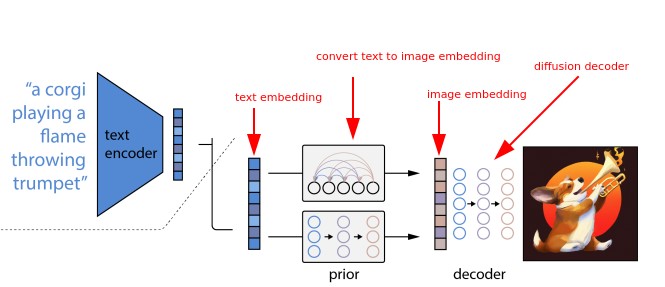

You provide Dall-E 2 with a text prompt.

This prompt is taken by CLIP’s text encoder, which understands its meaning using NLP and then converts it into a text embedding.

Now, this text embedding is passed to the prior.

The prior accepts the CLIP text embedding as input and generates a CLIP image embedding from it.

Finally, the decoder uses this CLIP image embedding to generate the image.

The diffusion decoder used here is inspired by OpenAI’s GLIDE.
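The flow of data through these steps can be sketched as follows. Every function here is a hypothetical placeholder that only mimics the shape of the data passed along; none of it is OpenAI's code or API, and the real components are large neural networks. The decoder in this sketch also receives the text embedding, in line with the GLIDE discussion that follows.

```python
import hashlib

EMBED_DIM = 8  # hypothetical size, kept small for readability

def clip_text_encoder(prompt):
    """Placeholder: turn a prompt into a fixed-length list of floats in [0, 1]."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:EMBED_DIM]]

def prior(text_embedding):
    """Placeholder: map a text embedding to an image embedding of the same size."""
    return list(reversed(text_embedding))

def decoder(image_embedding, text_embedding, size=4):
    """Placeholder: build a size x size grid of 'pixel' values from both embeddings."""
    combined = [(i + t) / 2 for i, t in zip(image_embedding, text_embedding)]
    return [
        [combined[(row * size + col) % len(combined)] for col in range(size)]
        for row in range(size)
    ]

def generate_image(prompt):
    text_embedding = clip_text_encoder(prompt)        # prompt -> text embedding
    image_embedding = prior(text_embedding)           # text embedding -> image embedding
    return decoder(image_embedding, text_embedding)   # embeddings -> image

image = generate_image("A teddy bear on a skateboard in Times Square")
print(len(image), len(image[0]))  # prints 4 4
```

The point of the sketch is the order of the hand-offs: text goes in, embeddings travel between the components, and only the last component produces pixels.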

What is GLIDE?

GLIDE is itself an image generation model, but it is not a plain diffusion model: it embeds the text given to the model and uses that embedding to guide image creation, so the process relies only on the text embedding.

Dall-E 2, however, uses a modified version of GLIDE as its decoder. The decoder is set up to include not only the text information, as in GLIDE, but also the CLIP image embedding to support image generation.

The combination of text embeddings and image embeddings allows the diffusion decoder in Dall-E 2 to generate more realistic and creative images.

That’s it

I hope the article gave you a basic idea of how Dall-E 2 works. Of course, its internal workings rely on complex mathematics, and a deep and proper understanding of it requires a technical background.

So the explanation given here covers what we (in case you are one too) need to know about how Dall-E 2 functions as non-technical people.
